Part 2: How elastic are Amazon Elastic Load Balancers (ELB)? Very!

For those of you who have not read part 1 of this post, I recommend you take a look at the original ELB post, where I briefly described my experience testing ELB to see how elastic it truly is before we roll it out into production. We are building a highly concurrent socket and WebSocket based system, and we expect upwards of 10k new SSL connection requests per second (SSL is the important bit). We thought about building our own HAProxy + SSL termination solution, but to be honest, building an auto-scaling, resilient, multi-region load balancer is no mean feat, and something we’d prefer to avoid.

To put the expected volume of 10k SSL requests per second in context, a single Amazon EC2 small instance can terminate approximately 25 2048-bit SSL connections per second. If we were to terminate these connections ourselves using small instances, we’d need at least 400 small instances (10,000 / 25) running simultaneously, with an even spread of traffic through a DNS load balancer, to achieve this. Personally, I don’t like the idea of managing 400 CPUs (the equivalent of 400 small instances) at each of our locations.
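The arithmetic behind the 400-instance figure can be sketched as a small helper. The function name is hypothetical; the two input figures are the ones quoted above.

```python
def instances_needed(target_conn_per_sec: int, per_instance_conn_per_sec: int) -> int:
    """Smallest number of instances whose combined rate meets the target."""
    # Integer ceiling division, so a partial instance rounds up to a whole one.
    return -(-target_conn_per_sec // per_instance_conn_per_sec)


# Figures from the text: 10k new SSL connections per second overall,
# roughly 25 per second per EC2 small instance.
print(instances_needed(10_000, 25))
```

Note that this is a lower bound: it assumes a perfectly even spread of traffic and no headroom for spikes or failed instances.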

Hence we turned to ELB and started running our tests to see if it would scale as needed and deliver. One thing to bear in mind with ELB is that it does not officially support WebSockets, so in order to use ELB you have to configure it to balance using TCP sockets on ports 80 and 443. This works fine, except that the client’s source IP is not available to the servers behind the load balancer. I am told Amazon are working on a solution, but at present none exists, and it’s not such a big problem for us anyway.
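As a rough illustration of the TCP-level setup described above, the listener definitions might look something like the following. This is a sketch under assumptions, not our actual configuration: the helper name, the backend port and the certificate identifier are all hypothetical, and I am assuming that SSL is terminated at the load balancer (hence `SSL`, i.e. secure TCP, on port 443). The dictionary keys follow the shape of the `Listeners` parameter that boto3's classic ELB `create_load_balancer` call accepts.

```python
def tcp_listeners(instance_port: int, certificate_id: str) -> list:
    """Build TCP-level listener definitions for ports 80 and 443."""
    return [
        {
            # Plain TCP pass-through, which lets WebSocket traffic through.
            "Protocol": "TCP",
            "LoadBalancerPort": 80,
            "InstanceProtocol": "TCP",
            "InstancePort": instance_port,
        },
        {
            # Secure TCP: the load balancer terminates SSL and forwards
            # plain TCP to the backend (an assumption, see the lead-in).
            "Protocol": "SSL",
            "LoadBalancerPort": 443,
            "InstanceProtocol": "TCP",
            "InstancePort": instance_port,
            "SSLCertificateId": certificate_id,
        },
    ]


# Hypothetical backend port and placeholder certificate identifier.
listeners = tcp_listeners(8080, "example-certificate-id")
for listener in listeners:
    print(listener["Protocol"], listener["LoadBalancerPort"])
```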

With the help of Spencer at Amazon, who was incredibly helpful throughout the entire process (which lasted more than 2 weeks), we managed to get to a point where we knew the tests were accurate, any bottlenecks we found were indeed in ELB and not caused by other factors, and we could replicate our tests easily. Spencer set up CloudFormation templates (awesome stuff) so that we could spin up the servers and the load testing clients in the same configuration each time. I worked on building a simple WebSocket server, a WebSocket testing client, and a controller app (based on Bees With Machine Guns) so that we could spin up any number of load testing clients we needed and start a progressive and realistic attack on the load balancers. On GitHub you can find my core repositories for the WebSocket load testing client and server, and the modified Bees With Machine Guns, which does not rely on SSH connections, as I found these to be unreliable when you spin up more than 10 load testing machines (we went up to 80 c1.medium).
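To give a feel for what a progressive attack means in practice, here is a minimal sketch of how a controller could plan a ramp and share it across the load testing clients. It is not the code from the repositories mentioned above: the function names and the linear ramp shape are assumptions made for illustration, while the 10k peak and the 80 clients are the figures from our tests.

```python
def ramp_schedule(peak_rate: int, steps: int) -> list:
    """Target new connections per second for each step of a linear ramp."""
    return [peak_rate * (i + 1) // steps for i in range(steps)]


def per_client_rates(total_rate: int, clients: int) -> list:
    """Split a total rate across clients so the parts sum to total_rate."""
    base, extra = divmod(total_rate, clients)
    # The first `extra` clients take one additional connection per second.
    return [base + 1 if i < extra else base for i in range(clients)]


# Ramp to 10k new connections per second in 5 steps across 80 clients.
for target in ramp_schedule(10_000, 5):
    rates = per_client_rates(target, 80)
    print(target, rates[0], sum(rates))
```

Splitting the rate this way keeps every client within one connection per second of the others, so no single load testing machine becomes the bottleneck before the load balancer does.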

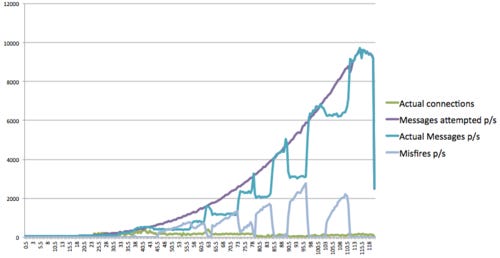

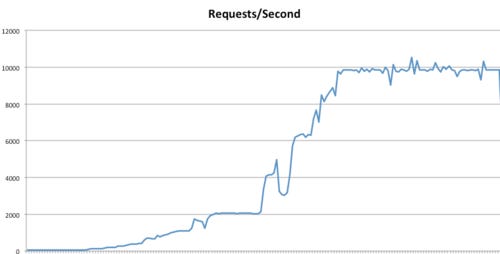

In summary, the results were very encouraging, but certainly not perfect. In my original post I alleged that ELB was flatlining at around 2k requests per second. Unfortunately this was wrong, and the fault was mine: it was caused by the way the testing clients were designed. Once I made the controller more reliable, made the load testing client not wait for connections to close, and replicated more realistic traffic growth, I easily got to 10k requests per second when running the tests myself in my EC2 cloud, and so did Spencer (Amazon) with his tests using CloudFormation and the libraries described above. What we found is that ELB does seem to have unlimited scaling capabilities; however, it doesn’t necessarily scale as fast as you want it to all the time. If you look at the graphs, you will see plenty of dips where we would expect ELB to scale up horizontally to cope with more demand, yet it doesn’t until some time passes. Eventually ELB does catch up, but it’s worth bearing in mind that if you get spikes in traffic, latency will increase and some connections may drop as ELB plays catch-up.

My tests using ELB and EC2 showing expected versus actual in terms of new SSL connections per second

Amazon’s (Spencer) test on ELB where we expect a smooth bicubic curve
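One way to quantify the dips in graphs like these is to compare the expected and actual connection rates sample by sample and flag the stretches where the actual rate lags. The sketch below is an illustration only: the function name and the 10% tolerance are assumptions, and the sample numbers are invented for the example, not measurements from our tests.

```python
def shortfall_windows(expected, actual, tolerance=0.1):
    """Return (start, end) sample indices where actual lags expected
    by more than `tolerance` (a fraction of the expected rate)."""
    n = min(len(expected), len(actual))
    windows = []
    start = None
    for i in range(n):
        lagging = actual[i] < expected[i] * (1 - tolerance)
        if lagging and start is None:
            start = i  # a dip begins
        elif not lagging and start is not None:
            windows.append((start, i - 1))  # the dip ended on the previous sample
            start = None
    if start is not None:
        windows.append((start, n - 1))  # still lagging at the final sample
    return windows


# Made-up sample data: new SSL connections per second at each interval.
expected = [1000, 2000, 3000, 4000, 5000, 6000]
actual = [1000, 1950, 2400, 2500, 4900, 6000]
print(shortfall_windows(expected, actual))
```

Each window marks a period where the load balancer had not yet scaled out far enough to match demand, which is when you would expect higher latency or dropped connections.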

So to summarise:

- ELB is pretty damn awesome and scales incredibly (pretty much limitlessly, it seems).
- ELB has its problems and is not perfect. It’s designed to scale as necessary, but your traffic growth may outpace ELB’s natural scale-out algorithms, meaning you will experience latency or dropped connections for short periods.
- We’re going to be using ELB as we believe it’s the best solution out there by a long stretch (we considered hardware load balancing and SSL termination, as well as Rackspace, GoGrid, and a bunch of others).
- Amazon have been amazingly helpful throughout this exercise, way beyond what I could have expected.