The 'Impossible' Architecture: How Ably Maintained Zero Impact During the AWS US-East-1 Outage

When PubNub's CEO called our results impossible, he validated exactly what we built

Last Monday, October 20, AWS US-EAST-1 experienced a major outage lasting over 14 hours. For any infrastructure company running on AWS, this was a real-world stress test of architectural resilience.

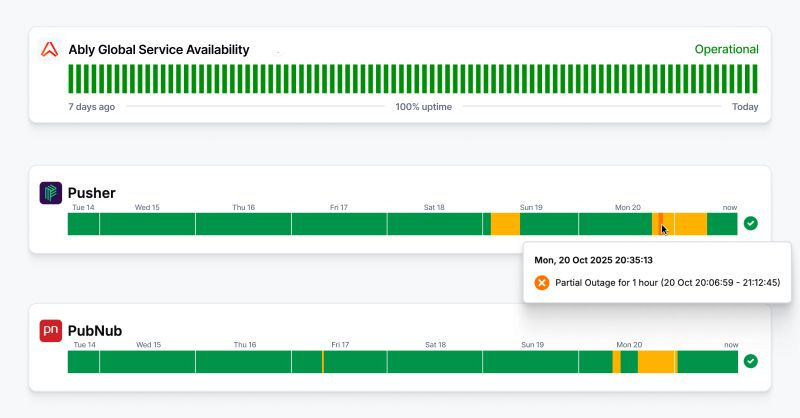

The results were stark:

Ably: Operational throughout. Zero customer impact. Zero downtime.

PubNub: 10+ hours of degraded service across two incidents.

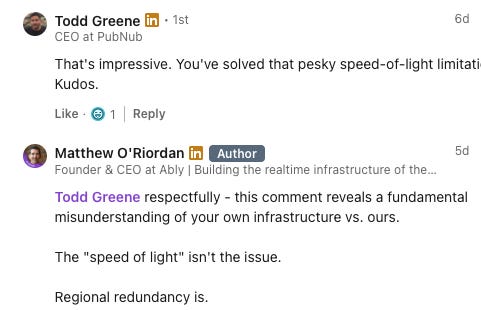

I expected we’d share technical post-mortems and move on. What I didn’t expect was for PubNub’s CEO, Todd Greene, to publicly question our results, suggesting they were “impossible” and that we’d “solved that pesky speed-of-light limitation”.

Those comments became the ultimate validation of years of architectural investment.

What Todd Called “Impossible”

Todd’s comment implied that maintaining operational status during a regional AWS failure defies the laws of physics. Let me explain what he’s calling “impossible” - it’s actually well-thought-through infrastructure design.

The core principle: Regional redundancy.

Ably operates TWO datacenters within the US-East region:

AWS us-east-1 (Northern Virginia)

AWS us-east-2 (Ohio)

The difference in network latency between these two regions, as seen from major East Coast cities:

New York: 5-10ms

Boston: 8-12ms

Washington DC: 7-10ms

Atlanta: 10-12ms

When us-east-1 became unavailable, we failed over to us-east-2. Our monitoring showed a worst-case median latency increase of 12ms (anyone can easily verify this is possible and does not require any light speed violations 🤭).

That’s basic geography, not bending physics.
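As a rough sanity check on the physics, the sketch below estimates the lower bound on round-trip time between the two regions. The coordinates are approximate, city-level assumptions (Ashburn, Virginia and Columbus, Ohio as stand-ins, not actual datacenter sites), and the fiber speed of roughly 200,000 km/s (about two-thirds of the speed of light) is a common rule of thumb. Real fiber routes are longer than the great-circle path, so measured latency will be higher than this floor.

```python
import math

# Assumed, approximate city-level coordinates (not exact datacenter sites).
ASHBURN_VA = (39.04, -77.49)    # stand-in for us-east-1
COLUMBUS_OH = (39.96, -83.00)   # stand-in for us-east-2

EARTH_RADIUS_KM = 6371.0
FIBER_SPEED_KM_PER_S = 200_000.0  # rule of thumb: ~2/3 the speed of light


def great_circle_km(a, b):
    """Haversine distance in km between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def min_round_trip_ms(distance_km):
    """Theoretical floor on round-trip time over fiber, in milliseconds."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_S * 1000


if __name__ == "__main__":
    d = great_circle_km(ASHBURN_VA, COLUMBUS_OH)
    print(f"distance ~{d:.0f} km, round-trip floor ~{min_round_trip_ms(d):.1f} ms")
```

Under these assumptions the floor comes out at a few milliseconds, which leaves plenty of room beneath a 12ms increase.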

Our blog post on how Ably’s multi-region architecture held up during the US-East AWS outage has the full technical details including performance graphs.

What Happened at PubNub

According to PubNub’s public status page, their experience was very different:

Incident 1: October 20, 07:06-23:48 UTC (16 hours 42 minutes)

“Elevated latencies and errors for multiple services in US-west and US East”

Incident 2: October 20, starting 07:06 UTC (2 hours 1 minute)

“Increased latency and errors observed in US-West”

Total: Over 16 hours of documented degraded service.

Their blog post about the incident explained why: “The physical distance to other regions was going to increase latencies.”

What this reveals: PubNub doesn’t appear to have multi-datacenter redundancy within US-East. When us-east-1 failed, they had to route traffic across the country or globe - hence the multi-hour latency issues.

This Isn’t Infrastructure 101

I want to be clear: building truly resilient global infrastructure is hard. This isn’t “infrastructure 101” - it’s the result of 10 years of deliberate architectural choices and significant investment.

What we have built at Ably:

Multi-region architecture with no single points of failure:

Each region operates independently

Regions communicate peer-to-peer (no central hub)

Failure in one region doesn’t cascade to others

700+ edge locations globally via CloudFront

Connection state recovery:

2-minute window to seamlessly resume after disconnections

Messages queued on servers, not just client-side

Zero message loss during network interruptions

Automatic reconnection without application code
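To make the idea of a server-side resume window concrete, here is a minimal sketch of a per-connection buffer that keeps recent messages and replays whatever a client missed if it comes back in time. The class name `ResumeBuffer`, its methods, and the serial-number scheme are hypothetical illustrations, not Ably's implementation; only the 2-minute figure comes from the list above. As a simplification, expiry here is by message age.

```python
import time
from collections import deque

RESUME_WINDOW_S = 120  # the 2-minute window mentioned above


class ResumeBuffer:
    """Hypothetical server-side buffer of recent messages for one connection."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._messages = deque()  # (serial, timestamp, payload), oldest first
        self._next_serial = 1

    def publish(self, payload):
        """Store a message and return the serial assigned to it."""
        serial = self._next_serial
        self._next_serial += 1
        self._messages.append((serial, self._clock(), payload))
        self._expire()
        return serial

    def resume(self, last_seen_serial):
        """Return payloads the client missed, or None if the gap is unrecoverable."""
        self._expire()
        oldest_needed = last_seen_serial + 1
        if oldest_needed >= self._next_serial:
            return []  # client is already up to date
        if not self._messages or self._messages[0][0] > oldest_needed:
            return None  # some missed messages have already expired
        return [p for s, _, p in self._messages if s > last_seen_serial]

    def _expire(self):
        """Drop messages older than the resume window."""
        cutoff = self._clock() - RESUME_WINDOW_S
        while self._messages and self._messages[0][1] < cutoff:
            self._messages.popleft()
```

A client that reconnects would send the last serial it saw. A list back means it can continue without gaps, while `None` tells it to fall back to a fresh connection.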

SDK resilience:

Automatic failover to healthy datacenters

Multiple fallback hosts tried in sequence

Connection state preserved during transitions

Transparent to end users
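The "fallback hosts tried in sequence" idea can be sketched in a few lines. This is a generic illustration, not the Ably SDK's code: the function name `connect_with_fallback`, the `connect` callable, and the example host names are all hypothetical.

```python
def connect_with_fallback(hosts, connect):
    """Try each host in order; return (host, connection) for the first success.

    `connect` is any callable that takes a host and either returns a
    connection object or raises OSError on failure.
    """
    errors = []
    for host in hosts:
        try:
            return host, connect(host)
        except OSError as exc:
            errors.append((host, exc))  # remember why this host was skipped
    raise ConnectionError(f"all hosts failed: {errors}")


if __name__ == "__main__":
    # Hypothetical hosts; the first one is simulated as unreachable.
    down = {"primary.example.com"}

    def fake_connect(host):
        if host in down:
            raise OSError(f"{host} unreachable")
        return f"connection to {host}"

    host, conn = connect_with_fallback(
        ["primary.example.com", "fallback-a.example.com"], fake_connect)
    print("connected via", host)
```

Passing the connect function in as a parameter keeps the retry logic separate from the transport, so the same loop can be exercised with a fake in tests and a real socket or WebSocket client in production.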

Ably engineering philosophy: “Do the hard infrastructure work so our customers don’t have to”

This philosophy and investment are clearly evident in our Four Pillars of Dependability.

The Broader Pattern

The AWS outage wasn’t an anomaly. Looking at the last year of public incident data (October 2024 - October 2025):

Ably (status.ably.com):

5 customer-impacting incidents

531 minutes of degraded service

PubNub (status.pubnub.com):

26 customer-impacting incidents (5.2x as many as Ably)

6,045 minutes of degraded service (11.4x as many as Ably)

Pattern of Presence service failures
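The multiples can be recomputed directly from the raw counts listed above. This small sketch uses only those four numbers; the variable and function names are illustrative.

```python
ably = {"incidents": 5, "minutes": 531}
pubnub = {"incidents": 26, "minutes": 6045}


def ratio(metric):
    """PubNub's figure expressed as a multiple of Ably's."""
    return pubnub[metric] / ably[metric]


for metric in ("incidents", "minutes"):
    r = ratio(metric)
    print(f"{metric}: {r:.1f}x as many as Ably, i.e. {r - 1:.1f}x more")
```

Note that "N times as many" and "N times more" differ by one, so it is worth checking which convention a quoted multiple uses.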

Ably is not claiming perfection, but we are demonstrating that architectural choices have real consequences for reliability.

(Source: Reliability By The Numbers: Ably vs Pusher vs PubNub incident analysis)

Why This Matters to Customers

This is exactly why customers migrate from PubNub to Ably.

To date, enterprise customers who migrated from PubNub to Ably in search of a better service have generated a whopping $7.5m of revenue.

We’re not aware of any enterprise customers who’ve migrated in the opposite direction.

These customers consistently cite similar reasons: They needed reliability guarantees they could actually depend on.

One recent enterprise customer (we can’t name them - PubNub threatened legal action when they tried to publish a case study) reported:

65% reduction in operating costs

95% reduction in waiting room issues related to realtime

100% elimination of ghost-check-in times

99% reduction in app-closing errors

These metrics aren’t about marketing claims. They’re about real-world performance under real-world conditions - including infrastructure failures.

To Developers: Verify Everything

I’m making bold claims. Don’t trust me - verify the evidence:

1. Status Pages: status.ably.com and status.pubnub.com

2. Run Your Own Latency Test:

Ping from US locations to us-east-1 and us-east-2 and see the latency differences

Here’s some data and a handy script you can run yourself to reproduce this
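If you would rather write your own, a minimal sketch of such a test is below; it is not the script referenced above. It times TCP handshakes rather than ICMP pings (which many hosts ignore), and it assumes the public regional endpoints `dynamodb.us-east-1.amazonaws.com` and `dynamodb.us-east-2.amazonaws.com` as probe targets. Any reachable host in each region would do.

```python
import socket
import statistics
import time

# Assumed probe targets: public regional AWS service endpoints.
TARGETS = {
    "us-east-1": "dynamodb.us-east-1.amazonaws.com",
    "us-east-2": "dynamodb.us-east-2.amazonaws.com",
}


def tcp_connect_ms(host, port=443, timeout=3.0):
    """Time one TCP handshake to host:port, in milliseconds.

    The first call may also include DNS lookup time.
    """
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass
    return (time.perf_counter() - start) * 1000


def median_latency_ms(host, samples=10, probe=tcp_connect_ms):
    """Median of several probes; the median damps one-off spikes."""
    return statistics.median(probe(host) for _ in range(samples))


def main():
    """Probe each region and print the medians (requires network access)."""
    results = {region: median_latency_ms(host) for region, host in TARGETS.items()}
    for region, ms in results.items():
        print(f"{region}: {ms:.1f} ms")
    print(f"difference: {abs(results['us-east-1'] - results['us-east-2']):.1f} ms")

# Call main() to run the probes.
```

Run `main()` from a few different US locations (a laptop, a cloud VM, a CI runner) and compare the printed difference with the figures quoted earlier.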

3. Review Architecture Documentation:

Ably Four Pillars: https://ably.com/four-pillars-of-dependability

Ably platform architecture: https://ably.com/docs/platform-architecture

PubNub architecture: ... tumbleweed ... (I genuinely cannot find this information)

Transparency Makes Everyone Better

The more transparent we are about how we design systems and how they perform under real conditions, the more we can all learn from each other. There’s plenty of space for multiple strong companies in the realtime infrastructure market.

The AWS outage provided a rare, public test of every platform’s architecture. The results are documented on both our status pages.

Ably maintained operational status. PubNub experienced 10+ hours of degradation. That’s not impossible. That’s the difference between architectural choices made years ago.

What’s Coming Next 👊

This incident reminded me that we should talk more about the “impossible” things Ably achieves.

Over the next week, I’m publishing a series on things our competitors probably think are impossible:

Friday: Scaling native WebSockets to billions of connections daily

Why we chose the hard path instead of easier alternatives

What this enables for customers

The engineering investment required

Monday: Guaranteeing message ordering and exactly-once delivery in globally distributed systems

Why most platforms can’t offer these guarantees

How our architecture makes it possible

What this means for building reliable applications

Wednesday: Transparent pricing that doesn’t penalize your most successful customers

Why transaction complexity creates billing surprises

How consumption-based pricing aligns with customer success

The difference between simple marketing and simple reality

Each post will show what’s actually possible when you do the hard engineering work.

See you on Friday!

Matt

Technical co-founder and CEO of Ably